Smoking Hot Data Pipes

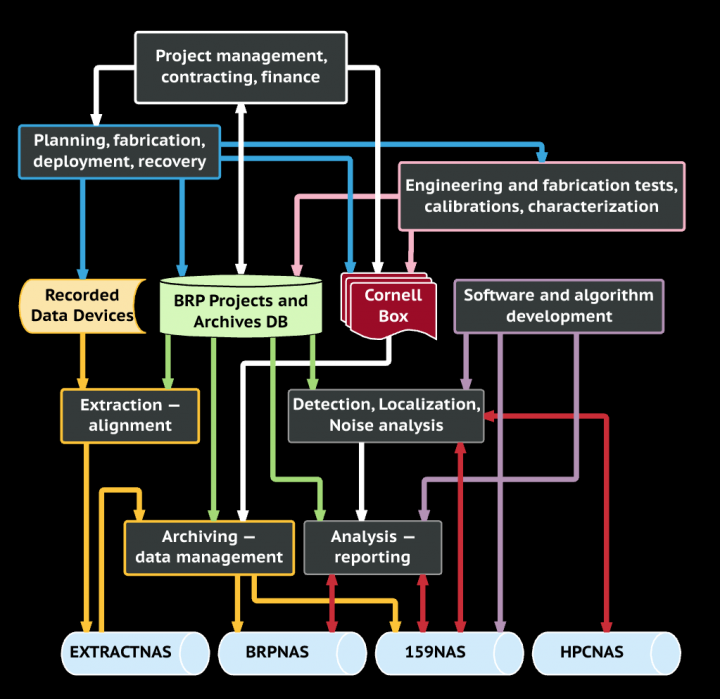

One of the biggest challenges the Bioacoustics Research Program (BRP) faces is moving the huge amounts of data we collect through our processing pipeline. The sidebar lists some of the data sources we currently use. Soon we will begin deploying our own very high-frequency Rockhoppers in the Gulf of Mexico, and because file size grows in direct proportion to sampling rate, those recorders will produce even larger volumes of data.
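To see why sampling rate drives data volume, it helps to do the arithmetic for raw audio: bytes = sample rate × bits per sample × channels × seconds ÷ 8. The sketch below assumes uncompressed 16-bit mono PCM with no file headers; the sample rates in the loop are illustrative and are not the settings of any BRP recorder.

```python
def raw_bytes_per_day(sample_rate_hz, bits_per_sample=16, channels=1):
    """Bytes of uncompressed PCM audio produced by one day of continuous recording.

    Assumes linear PCM with no compression or file headers; the defaults
    (16-bit, mono) are illustrative, not any particular recorder's settings.
    """
    seconds_per_day = 24 * 60 * 60
    total_bits = sample_rate_hz * bits_per_sample * channels * seconds_per_day
    return total_bits // 8


# Illustrative rates only: doubling the sampling rate doubles the daily volume.
for rate in (2_000, 96_000, 192_000):
    print(f"{rate:>7} Hz -> {raw_bytes_per_day(rate) / 1e9:.2f} GB/day")
```

Because every term is a simple multiplier, a recorder sampling 96 times faster produces 96 times as much raw data per day.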

When the data comes home to the Lab, it must be pre-processed in several ways before analysis can begin. This usually generates even more data: time-compensated files, in which multiple recording streams are aligned to one another and then written out as new multi-channel files, and decimated files, in which high-frequency recordings are resampled to a lower sampling rate for certain types of analysis. Because we also run high-frequency analyses, the originals must be kept as well, so nothing ever goes away!
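Both derived products can be sketched in a few lines. This is a hypothetical illustration, not BRP's actual tooling: `align_streams` assumes the per-stream start offsets (in samples) are already known, whereas real time compensation may also have to correct clock drift, and `decimate` uses a deliberately crude boxcar (block-average) anti-aliasing filter where production tools such as SciPy's `scipy.signal.decimate` use sharper FIR or IIR filters.

```python
def align_streams(streams, offsets):
    """Build multi-channel frames from single-channel streams.

    offsets[i] is the (assumed already known) number of samples by which
    stream i started early; those samples are dropped so all streams share
    a common start, and the output is truncated to the shortest overlap.
    """
    trimmed = [stream[offset:] for stream, offset in zip(streams, offsets)]
    n_frames = min(len(t) for t in trimmed)
    # Each frame holds one sample per channel at the same instant.
    return [tuple(t[i] for t in trimmed) for i in range(n_frames)]


def decimate(samples, factor):
    """Reduce the sampling rate by an integer factor.

    Averages each block of `factor` samples (a crude boxcar low-pass filter)
    and keeps one value per block; a trailing partial block is dropped.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    return [
        sum(samples[start:start + factor]) / factor
        for start in range(0, len(samples) - factor + 1, factor)
    ]


frames = align_streams([[0.1, 0.2, 0.3], [9.9, 0.1, 0.2, 0.3]], [0, 1])
print(frames)
print(decimate([1, 3, 5, 7, 9, 11], 2))  # [2.0, 6.0, 10.0]
```

Note that decimation shrinks a file by roughly the decimation factor, but because the original is kept too, it still adds to the total stored.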

Next comes analysis, which creates still more data. Our biggest product has always been noise analysis: the process of mathematically and graphically describing how the energy of anthropogenic and natural sound sources is distributed across the frequency bands relevant to the animals we study. These sources range from airplanes and highways to ship noise, seismic survey blasts, and even the occasional earthquake or icequake (depending on where we are recording). In the past, noise-analysis output was roughly equal in size to the audio input (so we doubled our data size yet again!), but through some cleverness, in recent years we have reduced the output to about 25% of the input size without loss of resolution or accuracy.
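At its core, describing "energy distribution in frequency bands" means transforming a frame of audio into a spectrum and summing power over each band. The sketch below is a generic illustration, not BRP's noise-analysis method: it uses a naive discrete Fourier transform so it needs only the standard library, and the band edges and test tone are arbitrary assumptions. A real pipeline would use an FFT, windowing, averaging over many frames, and calibration to physical units.

```python
import cmath
import math


def band_energies(samples, sample_rate_hz, bands):
    """Total spectral power in each (low_hz, high_hz) band of one frame.

    Uses a naive O(N^2) DFT over the non-negative frequency bins; the
    result is relative (uncalibrated) power, suitable only for comparison.
    """
    n = len(samples)
    n_bins = n // 2 + 1  # bins from 0 Hz up to the Nyquist frequency
    power = []
    for k in range(n_bins):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples)
        )
        power.append(abs(coeff) ** 2)
    freqs = [k * sample_rate_hz / n for k in range(n_bins)]
    return [
        sum(p for f, p in zip(freqs, power) if low <= f < high)
        for low, high in bands
    ]


# A 100 Hz tone sampled at 1 kHz: essentially all energy lands in 50-150 Hz.
fs = 1000
tone = [math.sin(2 * math.pi * 100 * i / fs) for i in range(100)]
print(band_energies(tone, fs, [(0, 50), (50, 150), (150, 500)]))
```

Storing a handful of band levels per frame instead of every sample is one general way such output can end up smaller than the audio it summarizes.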

Not only do we need to process all this data, but we also feel compelled to archive it for future generations. Because we typically record continuously at our sites, we are capturing long-term soundscapes that may prove useful for re-analysis. For example, for years we thought we were recording only whales along the Atlantic seaboard. When Dr. Aaron Rice, a fish biologist, joined BRP, he said, “We should go back and analyze some of those recordings for fish choruses.” As a result, BRP was able to generate new scientific papers from the same data. Likewise, species that were not a focus of the original analyses, such as minke whales, are now being found in our past recordings by new detectors running on our high-performance computers, and these findings will lead to even more publications.

ChrisP connecting the dots between data and seamless access.

The server system providing fast access to large scale data sets.

Currently, we keep our active data on a 200-terabyte network-attached storage device: in geek-speak, a 200-TB NAS; in plain English, a big disk that we can all see and share. As data manager, my job is to make sure that safe archival copies of incoming and derived data are also written to other media. My motto: never trust anything plugged in and spinning at 7200 RPM! We also run four more NAS units for specialized uses, of 100 TB, 96 TB, 32 TB, and 18 TB. In total, that is nearly half a petabyte (about 446 TB) of spinning disk!
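One common way to make sure an archival copy is actually safe is to checksum it: hash the source, copy it, hash the copy, and compare. The sketch below is a hypothetical helper, not the tool BRP uses; the names `sha256_of` and `archive_copy` are illustrative, and only Python standard-library calls are used.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large recordings never load fully into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def archive_copy(src, dest_dir):
    """Copy src into dest_dir, then re-hash the copy and compare with the source.

    Returns the SHA-256 hex digest, which can be stored in a manifest and
    re-checked later. Deletes the copy and raises if the hashes differ.
    """
    src = Path(src)
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / src.name
    expected = sha256_of(src)
    shutil.copy2(src, dest)  # copies file contents and timestamps
    if sha256_of(dest) != expected:
        dest.unlink()
        raise OSError(f"checksum mismatch after copying {src} to {dest}")
    return expected
```

A hash taken immediately after copying may be read back from the operating system's cache rather than the disk itself, so keeping the returned digest and re-verifying the archive later is the more trustworthy check, very much in the spirit of distrusting anything that spins.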

We use hard drives, hundreds of them, as large as 6 TB each. My finger is trembling over the BUY button for the new 12-TB drives on the market. I also write copies to high-capacity LTO tapes (2.5 TB each). The Lab as a whole is moving to a new deep-storage plan that will upload all our data to Amazon’s cloud servers, which will keep a copy at a safe geographic distance in case of a disaster such as a building fire. Once that is in place, the need for tapes will diminish, and eventually even the hard-drive archive will become redundant, since any data will be recallable quickly for re-analysis.
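A quick capacity-planning calculation shows why bigger media matter. As a rough stand-in for data volume, the sketch below uses the 446 TB obtained by adding up the NAS capacities listed earlier; that is an assumption, since installed capacity is not the same as stored data. It also assumes nominal decimal capacities with no compression or filesystem overhead, so real counts would be somewhat higher.

```python
import math


def media_needed(data_tb, capacity_tb, copies=1):
    """Number of drives or tapes needed to hold `copies` full copies of the data.

    Uses nominal (decimal) capacities and assumes no compression or
    filesystem overhead.
    """
    per_copy = math.ceil(data_tb / capacity_tb)
    return per_copy * copies


data_tb = 200 + 100 + 96 + 32 + 18  # sum of the NAS capacities: 446 TB (stand-in)
for name, capacity in [("LTO tape", 2.5), ("6-TB drive", 6), ("12-TB drive", 12)]:
    print(f"{name}: {media_needed(data_tb, capacity)}")
```

Under these assumptions, one full copy would need 179 LTO tapes, 75 six-terabyte drives, or 38 twelve-terabyte drives, which is why the 12-TB BUY button is so tempting.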

Moving all this data around takes an unbelievable amount of time. When I started nine years ago, 40 MB/s was a good copy speed, but that rate was failing to keep up with higher sample rates and faster analyses. So last year I developed a plan, and this year, with Holger’s blessing and support and huge help from Justin Kintzele, the Lab of Ornithology’s IT system manager, we upgraded BRP’s internal network from 1 Gbps to 10 Gbps. The upgrade used a “dark” wiring system that one of our former engineers had, with great foresight, put in place while the Johnson Center was being constructed. Now, on a good day, I see transfer rates of 4.5–5 Gbps (about 14 times faster than the old 40 MB/s, which is roughly 0.32 Gbps) when I am running multiple jobs on my servers, moving, compressing, or archiving data from here to there, or over there, or back again when the scientists call for it.
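The speed-up is easy to check once network rates (bits per second) and copy rates (bytes per second) are put in the same units. The sketch below assumes decimal units (1 MB = 10^6 bytes, 1 Gbps = 10^9 bits/s) and a hypothetical 10-TB transfer; the function names are illustrative.

```python
def gbps_to_bytes_per_s(gbps):
    """Convert a network rate in gigabits/s to bytes/s (8 bits per byte, decimal units)."""
    return gbps * 1e9 / 8


def transfer_hours(data_bytes, rate_bytes_per_s):
    """Hours needed to move data_bytes at a sustained rate."""
    return data_bytes / rate_bytes_per_s / 3600


ten_tb = 10e12                       # hypothetical 10-TB job, in decimal bytes
old_rate = 40e6                      # 40 MB/s
new_rate = gbps_to_bytes_per_s(4.5)  # 4.5 Gbps = 562.5 MB/s

print(f"old: {transfer_hours(ten_tb, old_rate):.1f} h")
print(f"new: {transfer_hours(ten_tb, new_rate):.1f} h")
print(f"speed-up: {new_rate / old_rate:.1f}x")
```

At 40 MB/s, that 10-TB job takes about 69 hours; at 4.5 Gbps it takes just under 5 hours, a speed-up of about 14 times.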

All the analysts and the high-performance compute servers now have shared and very fast access to the central NAS, and I have blazing access to the archival media systems at the same time. Smoking pipes indeed!